In the world of academic publishing and online scholarship, accurate download statistics matter more than ever to authors, administrators, and institutions. Yet it has never been harder to distinguish robots from human readers: robots are becoming more sophisticated, and filtering methods must evolve continuously to keep pace. Bepress recognizes this need, and our engineering team is dedicated to staying on the leading edge, going beyond accepted standards to provide the best possible measures of impact.

Bepress recently joined the COUNTER working group on robots and is excited to help establish shared recommendations and codes of practice. We were also pleased to present at Open Repositories 2016, where we shared specifications drawn from our experience running scalable filtering for over 500 Digital Commons customers.

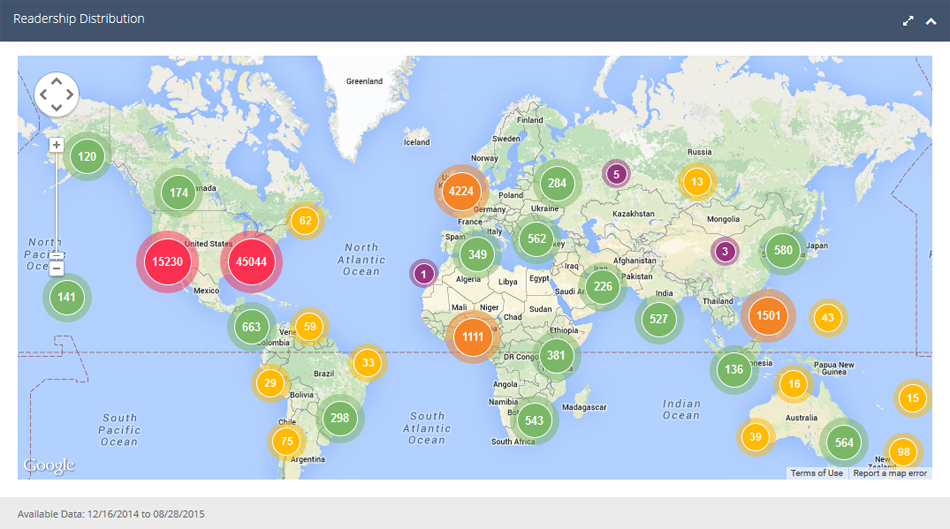

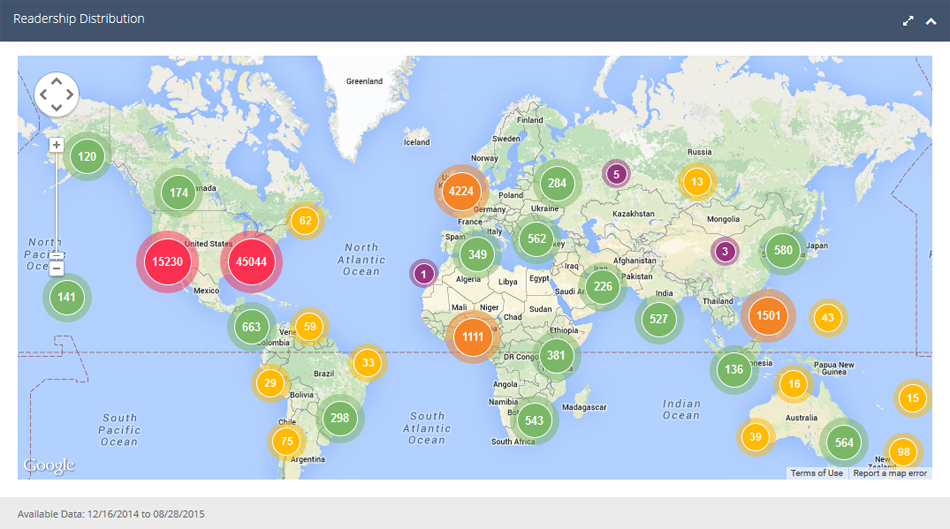

We’ve developed real-time detection methods so that accurate numbers power the impact maps and dashboards. To capture the complexities of filtering in real time, we’ve created a weighted algorithm that goes beyond simple hit metadata such as the user agent string. Many artificial forces can skew usage statistics and inflate download numbers, so our methodology weighs download time intervals, activity patterns, proxy servers, referrers, geolocation, multiple user agents, usage type determinations from third parties, and more. This technology also provides reliable usage statistics for the SelectedWorks Suite of profile pages and Expert Galleries.
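To make the idea of a weighted algorithm more concrete, here is a minimal sketch of how several per-session signals might be combined into a single robot score. It is purely illustrative: the signal names, weights, thresholds, and the `SessionStats` type are hypothetical assumptions, not bepress's actual algorithm or values, and it covers only a subset of the signals listed above (proxy and geolocation checks are omitted).

```python
from dataclasses import dataclass

# Hypothetical weights, for illustration only (not bepress's real values).
WEIGHTS = {
    "rapid_interval": 0.30,          # downloads arrive faster than a person could read
    "bulk_activity": 0.25,           # many distinct items fetched in one session
    "many_user_agents": 0.15,        # one client rotating through several user agents
    "no_referrer": 0.10,             # request carries no referrer
    "flagged_by_third_party": 0.20,  # an outside service classifies the source as automated
}
THRESHOLD = 0.5  # hypothetical cutoff above which a session is treated as a robot


@dataclass
class SessionStats:
    """Hypothetical summary of one visitor session."""
    min_seconds_between_downloads: float
    distinct_items: int
    user_agent_count: int
    has_referrer: bool
    third_party_flag: bool


def robot_score(s: SessionStats) -> float:
    """Sum the weights of every signal that fires for this session."""
    signals = {
        "rapid_interval": s.min_seconds_between_downloads < 2.0,
        "bulk_activity": s.distinct_items > 50,
        "many_user_agents": s.user_agent_count > 3,
        "no_referrer": not s.has_referrer,
        "flagged_by_third_party": s.third_party_flag,
    }
    return sum(WEIGHTS[name] for name, fired in signals.items() if fired)


def is_robot(s: SessionStats) -> bool:
    """Classify the session by comparing its score to the cutoff."""
    return robot_score(s) >= THRESHOLD


if __name__ == "__main__":
    reader = SessionStats(45.0, 3, 1, True, False)
    crawler = SessionStats(0.5, 200, 1, False, False)
    print(is_robot(reader), is_robot(crawler))
```

Because no single signal is decisive, a human reader who arrives without a referrer is not discarded, while a session that trips several signals at once is filtered out. Summing fixed weights also keeps each decision easy to explain and audit.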

As robot technology advances, we continually refine our filtering methods to anticipate the tools needed for accurate impact measurement. In the recent webinar "Bot Shields: Activate! Ensuring Reliable Repository Download Statistics," bepress engineer Stefan Amshey detailed our multi-faceted strategy, more than a decade in the making, for ensuring accurate download metrics across all Digital Commons repositories.

Accurate, up-to-the-minute data about your institution’s scholarly impact is critical to showcasing academic excellence. We hope this behind-the-scenes work offers a reliable way to demonstrate the benefits of openly available research. A more uniform, reproducible code of practice will also make it easier to compare download counts across scholarly platforms. We routinely investigate download counts and invite you to contact us at dc-support@bepress.com with any questions.